How Much Has ChatGPT Learned After Five Months?

ChatGPT, a popular AI chatbot by OpenAI, has seen significant improvement since its release.

Five months ago, artificial intelligence (AI) startup OpenAI took the internet and education system by storm with the public release of ChatGPT. The chatbot is backed by the large language model GPT-3.5, which can respond to a vast range of requests, from reporting back information from across the internet to writing essays, acing the bar exam, and scoring fives on many AP tests.

With such a revolutionary technology made so readily available, institutions and individuals have raised questions about whether the system would be the death of the college essay or even start replacing jobs, and whether such changes would require a complete reworking of curricula. The answer to many of these questions five months ago was, “Not yet.” But many didn’t expect that, only three months later, OpenAI would release a completely new model that has begun to offer a glimpse of how close those futures may be.

GPT-4, GPT-3.5’s successor, is a completely new AI behind ChatGPT that is trained with significantly more data than its predecessor. Currently available to those willing to pay $20 per month for early access, the AI has knowledge that spans much further than that of the previous models, and it has achieved impressive scores on exams ranging from the SAT to the Advanced Sommelier test.

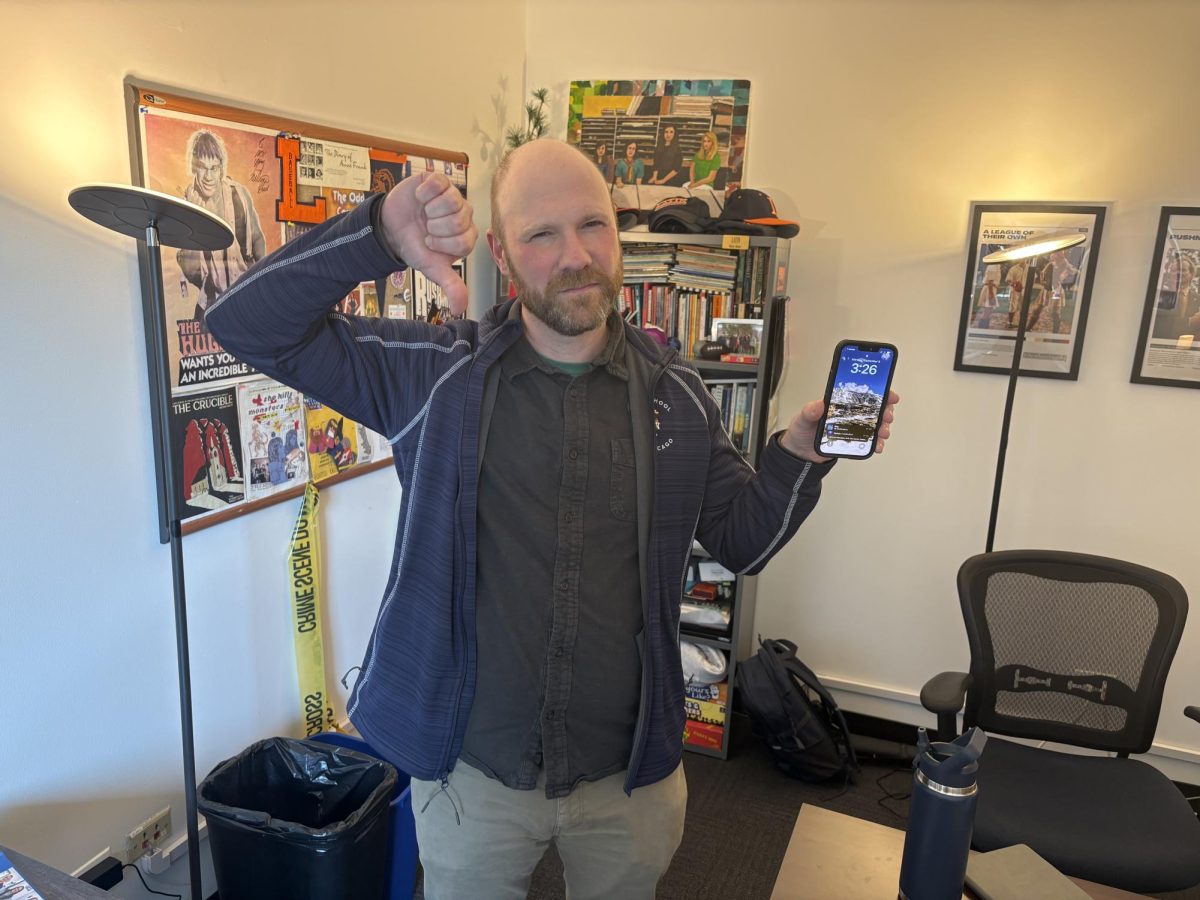

If ChatGPT were a student applying to college, it could have gone from the 70th percentile to the 89th in just one retake of the math section of the SAT. When asked to assess how well it writes compared to the previous version, Jim Joyce, an Upper School English teacher who also runs Latin’s Writing Center, said that it still isn’t at the point of crafting a perfect essay, but added, “I see how they could get there because even though this is not useful to me, it’s really impressive. It’s [just] not yet getting the nuance.”

One of the major questions ChatGPT raised was how schools would adapt, especially considering that the AI could complete assignments in a matter of seconds as if they were written by students. At first, teachers had the benefit of AI writing detection technology, but now, with the release of GPT-4, it is becoming evident that, at the rate the AI is improving, fully accurate detection may never be achieved.

Lenny Goldman, an Upper School English teacher who is “intrigued by the implications of AI,” acknowledges that students will explore the program as an added tool to help with the writing process in appropriate ways. When asked about GPT-4 producing increasingly sophisticated writing, he noted that still, for “the important learning, and this aligns with our work in standard-based grading as well, you’re only going to master the skills and the content if you are willing to put in the effort and embrace the struggle, and learn and grow.”

Echoing the understanding of many educators that ChatGPT will, in some ways, change learning, Mr. Goldman said, “it’s just pushing our thinking as educators [about] what we value, what we assess, and we have a lot of thinking to do.” However, with many tech companies rushing to figure out how best to take advantage of this AI, educators may face the added pressure of learning about and adapting to it before its next major development.

For example, Google recently released its own AI chatbot, Bard, to the public, although it hasn’t gained nearly as much attention as ChatGPT. Google has also teased AI products that will provide tailored writing help in Google Docs and Gmail. OpenAI is even creating plugins for ChatGPT that can give the AI new abilities, like searching the web for up-to-date information or connecting with other platforms like Wolfram Alpha, a mathematical computing tool.

Whether large language models like ChatGPT are about to reach a plateau is still unknown and also depends on what they are being used for. Many people use ChatGPT in ways that do not require attention to the technical details that could be improved, like freshman Clark Scroggins, who shares, “I like it when it gives me funny answers about stuff.” If the AI’s writing skills are used as a measure of how well the AI performs overall at replicating human intelligence, however, it definitely has room to grow.

Freshman Marc Abrahams said that AI is “just not genuine. You can make it sound a bit more realistic and a bit more genuine, but it’s always a bit off. You can tell this is written by a machine.”

Although the seemingly magical artificial intelligence is based around the concept of predicting what comes next based on previous examples, it’s still unclear if, in practice, there will be a distinction between human creativity and so-called AI creativity, at least in the near future. After all, both humans and AI models are just applying their experiences and knowledge from the world—or in ChatGPT’s case, from its data.

In describing how ChatGPT might affect humanities studies worldwide if used improperly, something the newer versions are making increasingly possible, Marc noted, “It would completely devalue the education of humanities, which is one of the most important subjects since it’s so important to culture. There would be moral conflicts.”

With fears that artificial general intelligence (AGI), an AI that perfectly replicates human intelligence and can act on its thoughts without human oversight, is just around the corner, many notable figures in tech, including Elon Musk and Steve Wozniak, have co-signed an open letter calling for a six-month pause on the development of AI systems more powerful than GPT-4. The letter argues that “powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable,” and asks, “Should we risk loss of control of our civilization?”

Junior Josh Goldhaber wondered, “At some point, do we run out of information to train the AI on?” He added, “Can they only be as smart as the human race?”

Whether or not today’s AIs will resemble the ones available in a year, it is clear that the world has only seen a sliver of the applications to come for this technology. Artificial intelligence has the potential to change many aspects of the world, including accessibility, and it’s up to everyone to make sure it’s used in a way that benefits humanity instead of replacing it. As Mr. Goldman said, “At some point, we all realize that there are times to take shortcuts and there are times that that doesn’t pay off.”

Teddy Lampert (’26) is excited to be serving as the Digital Editor-in-Chief for his fourth year on the Forum staff. He hopes to continue his work from...