Latin in the Age of ChatGPT: How Do We Learn with AI?

ChatGPT, an AI recently released by OpenAI, can communicate in several languages.

ChatGPT, a recent Artificial Intelligence (AI) innovation, has left students and educators alike trekking through terrain they have never navigated before. The chatbot—which can generate personalized writing and has already been shown to score higher than the median on SAT exams—has seemingly taken the media and the global education system by storm. Many have taken to arguing that its peppy tone and polished writing conceal a weapon of mass educational destruction.

For some context, ChatGPT, a large language model developed by OpenAI, is being considered as a tool for improving the learning process in educational institutions. However, experts have raised concerns about the negative effects it may have on critical thinking and creativity among students. While the capabilities of ChatGPT in understanding natural language are undeniable, it is important to consider the drawbacks before fully embracing its integration into the classroom. The ultimate decision on the integration of ChatGPT in education remains to be seen—whether it will prove to be a valuable asset or lead to negative consequences.

In case you’re still dubious about its writing skills, ChatGPT crafted that entire paragraph, albeit over about 15 drafts.

The AI that has caused this academic ruckus was released in November 2022. It’s built on GPT-3.5, a language model (a type of AI that predicts which words are likely to come next), and was adapted for use as a conversational chatbot. Essentially, a user can prompt the AI with a question, get a response, and then suggest edits to it, allowing for personalized writing.
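
To make the idea of a language model concrete, here is a minimal sketch in Python of a program that guesses the next word by counting which words followed which in a sample text. It is a toy illustration only, not how GPT-3.5 works internally: the sample sentence and the function names `train_bigram_model` and `predict_next` are made up for this example, and real models are neural networks trained on vastly more text.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical sample text, chosen only for illustration.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram_model(corpus)
print(predict_next(model, "the"))
```

Because the toy model only knows the words it was fed, it also hints at why the choice of training data matters so much.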

However, with this innovation come quite a few concerns. For one, ChatGPT has more than its fair share of biases and, in the wrong hands, could be a means to a malicious end. In the same vein, many educators are concerned that students are using the technology to cheat, and some school districts have already banned access to the resource.

As for its biases, ChatGPT was trained on data that humans created and selected. This means that the program is subject to implicit bias. As Computer Science Department Chair Ash Hansberry put it, “If you look at tech companies, they are historically not the most diverse places; it’s mostly young-ish, wealthy, college-educated, white men—mostly. They’re getting better, and [for] some companies that’s not true, but overall [tech company employees] are mostly one type of person, so the data they feed it is bound to be biased, just because that’s how humans work when you’re all the same type of human.”

Even though OpenAI, the company that launched ChatGPT, includes in its statement of values that it will not produce AIs that “harm humanity or unduly concentrate power,” the guardrails put in place to mitigate ChatGPT’s bias simply cannot cover all of the subtle ways in which the application could display prejudice. Mx. Hansberry said, “[OpenAI] explicitly told it ‘if someone asks how to do something illegal, don’t tell them. If somebody uses a slur, don’t accept it,’ but they’re humans, and often people working on tech is not the most diverse group of humans to begin with.” They continued, “Whatever they thought of is all that it knows.”

The gaps in these guardrails can be exploited for more than producing biased material; they can also be used to get help with illicit activity. Early versions of ChatGPT, for example, would decline to meaningfully answer a user who asked how to rob a bank but would comply with requests to write a one-act play on how to rob a bank.

Part of the problem is that AI is a sort of black box. “How the AI gives you what it gives you is a mystery,” Mx. Hansberry said. “No human has the time to trace back the millions of steps of data crunching and layers upon layers upon layers of probability to know how it got that.” In essence, AIs like ChatGPT look for patterns—so many patterns that humans cannot understand the complicated path from an input to its output.

As many major news organizations are realizing, all of these concerns are playing out at every level of education, with some sources, like The Atlantic, hailing ChatGPT as the death of the college essay, and others calling it a revolutionary new tool.

The dangers of permitting ChatGPT in a classroom environment are plentiful. The AI risks spreading misinformation, and many people, as Mx. Hansberry said, “don’t have the tools they need to evaluate if what the AI is giving them is a good thing or not.”

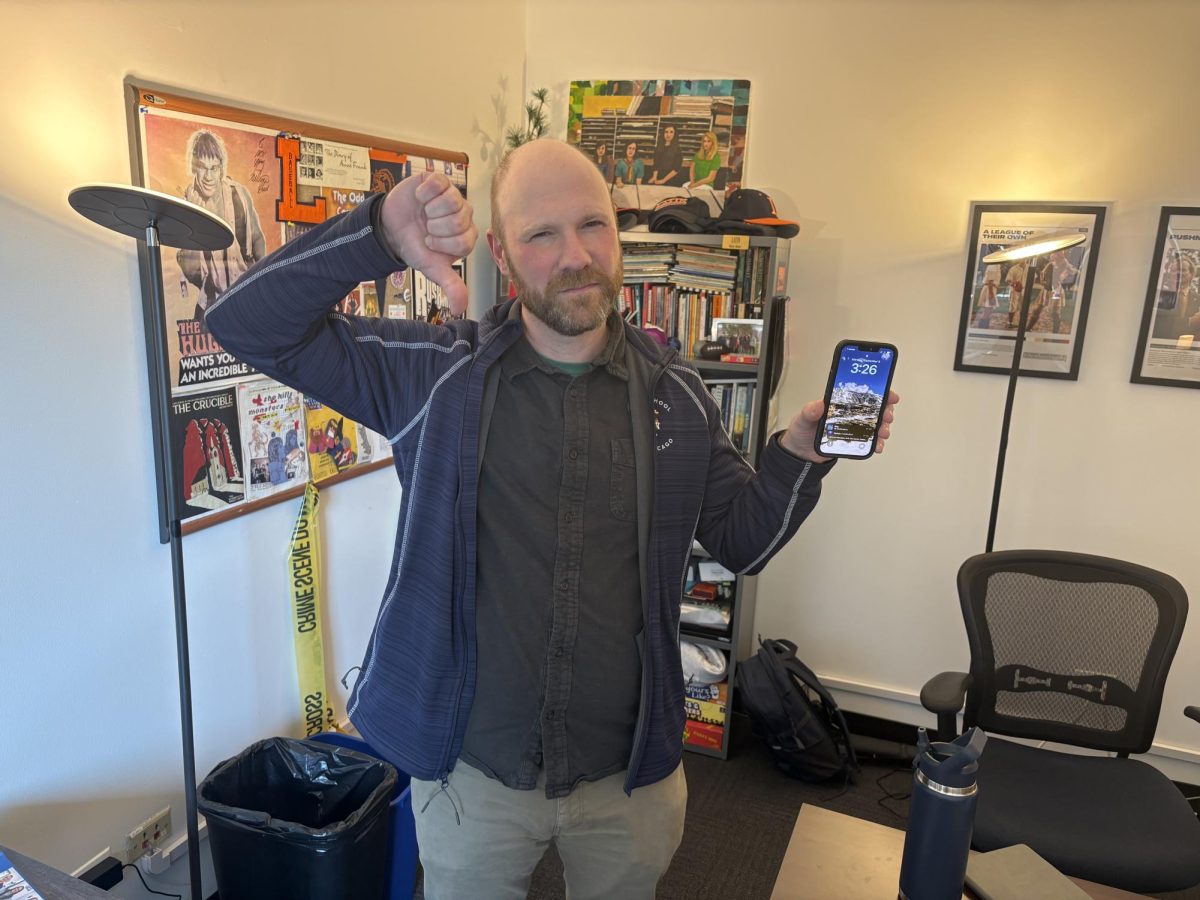

Upper School history teacher Greg Gaczol also has concerns about this new technology. “I’m concerned as a history teacher because a lot of what we do in history classes is we try to teach building skills that will lead students to analyze things on their own, come to their own interpretations, [and] understand multiple perspectives; a lot of these nuanced skills that are not easy to pick up and are not just Google-able. I’m worried that, at least from a writing perspective, what AI programs are doing is—I don’t even want to call it cheating right off the bat—but it’s shortcutting, and it’s taking away from the process of how to get to certain answers.”

Yet ignoring ChatGPT comes with its own dangers. As Mr. Gaczol said, “Students are looking for programs that help them come up with ideas or enhance their writing abilities and things of that nature, so we can’t just pretend it doesn’t exist.”

Upper School English teacher Brandon Woods said, “We can’t stop people from using it, but just like a calculator, just like the Grammarlys of the world, we have to figure out how we incorporate this into our instruction. [That] might mean more in-class writing, just so we get the sense of an authentic piece of writing from a student. That might change our assessments [or] our daily workflow.”

However, chances are that incorporating this technology into the classroom will be quite a process. As freshman Ralu Nzelibe said, “I think it’s got a long way to go before it’s reliable enough that teachers like using it in classrooms.”

Mr. Woods said, “We have to feel really confident in the way we’re using standards-based assessment, then we can start thinking about ‘how can we incorporate this new tool?’” The evolution of standards-based grading has been its own journey at Latin; the implementation of AIs like ChatGPT into Latin’s curriculum could be similarly complicated.

And yet, with enough time and collaboration, learning to navigate ChatGPT could be a part of education in the future. Mr. Gaczol said, “You can give anybody a particular tool, and if they don’t know how to use it, that tool ends up being worthless.” The challenge, then, is finding a way to teach students to use ChatGPT analytically and appropriately.

Students and educators, however, are still in the process of addressing that challenge, meaning the liminal period between the release of this technology and its integration into the classroom hasn’t yet ended. As Mr. Woods said, “This is a new tool that’s going to make writing look very different than in the past.”

Scarlet Gitelson (‘26) is delighted to be serving as one of this year’s Editors-in-Chief. Using her writing, she seeks to promote connection and discourse...